Getting to Know Gstreamer, Part 3

Intro

In Part 2 of this series, I covered the basics of pipeline construction. Now I'll get into a more real-world example with audio, video, and subtitles.

Muxing and Demuxing

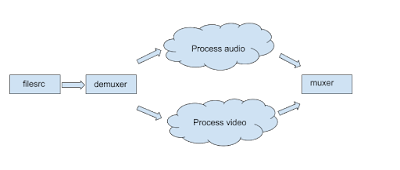

Our source MPEG-4 file contains both audio and video, and we want to output both to a web video player. I'll save the details of playing a video over the web for a later post, because first I want to focus on how we use Gstreamer to process both audio and video in the same pipeline. Each requires separate elements.

Consider this pipeline spec, which I pretty-printed for clarity. In order for Gstreamer to parse it, you would have to remove the numbers and remove or escape all the linefeed characters.

1. filesrc name=vidsrc location=my-video.mp4 ! decodebin name=demuxer
2. mpegtsmux name=muxer
3. demuxer. ! audioconvert ! audioresample ! faac ! muxer.
4. demuxer. ! x264enc ! muxer.
5. muxer. ! some element that accepts an MPEG-4 stream

This pipeline is broken up into five top-level "elements" that are numbered. Pipelines can be composed hierarchically out of elements, bins, and smaller pipeline fragments, and they all have virtually identical semantics for the purpose of composition.

I could have combined several of these top-level elements, but they will get combined anyway after the pipeline is constructed, and I prefer clarity, especially when it doesn't impact performance.

Also, while I haven't tested every combination, I believe that it's possible to arrange these top-level elements in any order. The Gstreamer parser doesn't care if a name like "muxer." appears in an element before it is actually defined, as long as it is defined. So the order is arbitrary, but I chose it for clarity.
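One way to keep the pretty-printed layout in source code while still giving the parser a single line is to store each top-level element as its own string and join them with spaces before handing the result to Gst.parse_launch(). This is a minimal sketch under that assumption: the helper name `build_launch_string` is hypothetical, and `filesink location=out.ts` is a hypothetical stand-in for "some element that accepts an MPEG-4 stream".

```python
# Each top-level fragment from the numbered spec, kept on its own line.
# "filesink location=out.ts" is a hypothetical stand-in for the final sink.
FRAGMENTS = [
    "filesrc name=vidsrc location=my-video.mp4 ! decodebin name=demuxer",
    "mpegtsmux name=muxer",
    "demuxer. ! audioconvert ! audioresample ! faac ! muxer.",
    "demuxer. ! x264enc ! muxer.",
    "muxer. ! filesink location=out.ts",
]


def build_launch_string(fragments):
    """Collapse pretty-printed fragments into one parse_launch() line.

    Any whitespace inside a fragment, including linefeeds, is normalized
    to single spaces, and empty fragments are skipped.
    """
    return " ".join(" ".join(f.split()) for f in fragments if f.strip())


if __name__ == "__main__":
    spec = build_launch_string(FRAGMENTS)
    print(spec)
    # With PyGObject installed, the string could then be passed to
    # Gst.parse_launch(spec) after calling Gst.init(None).
```

Because named references such as muxer. are resolved by the parser rather than by position, the order of FRAGMENTS is a readability choice, in line with the ordering note above.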

We've already seen #1 in Part 2. The new part is that I assigned the name "demuxer" to the decodebin element. We can refer to it later as "demuxer." with a dot on the end.

#2 declares a multiplexer element, mpegtsmux, which assembles the encoded streams into an MPEG transport stream, and assigns the name "muxer" to it.

#3 and #4 specify top-level elements for processing the audio and video streams respectively. As before, Gstreamer takes care of discovering which source pads the demuxer exposes, and then connecting audioconvert's and x264enc's sink pads to the appropriate demuxer source pads. Each of these top-level elements, #3 and #4, sends its results to the muxer, which combines them back into an MPEG-4 stream.

Finally, #5 is the final sink: the muxer sends the MPEG-4 content downstream, perhaps to a file, a web stream, or a player.

Here is an illustration of the above pipeline:

The above pipeline doesn't really accomplish anything other than to decompose the MPEG-4 file into separate audio and video tracks, then recombine them into a new MPEG-4 stream. It's sort of a glorious no-op that uses memory and CPU cycles to get back to the same starting point. But I needed to get you to this point so you will understand how I can use Gstreamer to add subtitles.

Subtitles

There are two main paths we can take to add subtitles. Since the pipeline already outputs its result as an MPEG-4 stream, we could add a subtitle track to that and be done. In that case, we would add a simple top-level element to the pipeline:

filesrc location=subtitles.srt ! subparse ! muxer.

subparse parses the subtitles that filesrc reads from the file. The latest version knows how to read 8 different formats (all of which can be edited and saved as text). They are:

- application/x-subtitle

- application/x-subtitle-sami

- application/x-subtitle-tmplayer

- application/x-subtitle-mpl2

- application/x-subtitle-dks

- application/x-subtitle-qttext

- application/x-subtitle-lrc

- application/x-subtitle-vtt

application/x-subtitle refers to the SubRip, or SRT, format, which is one of the simplest. subparse turns the file into a stream of timed raw text (text/x-raw) that downstream elements, such as a muxer or a text renderer, can consume.
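For reference, a SubRip file is just a sequence of numbered cues: an index line, a timing line of the form HH:MM:SS,mmm --> HH:MM:SS,mmm, one or more lines of text, and a blank line. The minimal sketch below prints such a file; the helper names `srt_timestamp` and `srt_cue` and the cue texts are hypothetical, chosen only to produce something in the shape subparse expects for application/x-subtitle.

```python
def srt_timestamp(seconds):
    """Format a time in seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def srt_cue(index, start, end, text):
    """Build one SubRip cue: index line, timing line, text, blank line."""
    return f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n\n"


if __name__ == "__main__":
    # Hypothetical cues; redirect stdout to subtitles.srt to use them.
    cues = [(1.0, 3.5, "Hello, world."), (4.0, 6.25, "A second subtitle.")]
    for i, (start, end, text) in enumerate(cues, start=1):
        print(srt_cue(i, start, end, text), end="")
```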

However, my client's client wants the subtitles to be rendered with fade effects. I found one subtitle encoding that can include a fade effect, but it's not in the above list. Even if it were, I'm not sure that fade effects even have an MPEG-4 encoding that browser players know how to handle.

My team lead and I decided that a saner way to provide a fade effect is to composite the subtitles directly onto the video image, where I have control over the alpha value of the subtitle layer as each subtitle begins and ends its appearance. That way, our dependency and risk exposure are limited to Gstreamer rather than spread across various browsers and browser versions.

Compositing

For compositing, we will add a new top-level element, a videomixer named "mixer", to the pipeline spec:

videomixer name=mixer

The mixer has one source pad named "src", but can expose any number of sink pads, named sink_0, sink_1, and so on. These are called "request pads" because they are created on request, when an upstream element needs to link its source pad to the mixer. videomixer can create any number of such pads. (I don't know if there is a hard limit, but I only need two, and I'll venture that it's limited only by available memory.)

We now redefine our pipeline spec schematically:

1. videomixer name=mixer sink_0::zorder=0 sink_1::zorder=1
2. filesrc location=my-video.mp4 ! decodebin name=demuxer ! mixer.sink_0
3. filesrc location=subtitles.srt ! subparse ! textrender ! mixer.sink_1
4. mpegtsmux name=muxer
5. demuxer. ! audioconvert ! audioresample ! faac ! muxer.
6. mixer. ! x264enc ! muxer. ! some element that accepts an MPEG-4 stream

The videomixer element now combines two video streams: the one from the source MPEG-4 file without subtitles, and the subtitle stream, parsed by subparse and rendered by textrender. The first element, the videomixer declaration, assigns a z-order to each sink pad so that the subtitles will appear on top of the original video. (I sometimes refer to this track as the "action" or "dramatic" video, which just means that something is moving in it, not my editorial opinion about its content.)
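Because each request pad is named after videomixer's sink_%u template, per-pad properties can be set with the pad_name::property=value syntax used in the mixer declaration. The minimal sketch below generates that declaration for any number of layers, assuming (as this pipeline does) that a higher zorder is drawn on top; `mixer_declaration` is a hypothetical helper, not part of Gstreamer.

```python
def mixer_declaration(layers, element="videomixer", name="mixer"):
    """Return a mixer declaration with one zorder entry per layer.

    Pad i is named sink_i, matching the sink_%u request-pad template,
    and gets zorder=i, so later layers are stacked above earlier ones.
    """
    pad_props = [f"sink_{i}::zorder={i}" for i in range(layers)]
    return " ".join([element, f"name={name}", *pad_props])


if __name__ == "__main__":
    # Two layers: the action video (sink_0) and the rendered subtitles (sink_1).
    print(mixer_declaration(2))
```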

The rest works as before.

Matching Aspect-Ratios

If I play this pipeline, the output will look like this:

The black background is one of the first frames of the action video. The checkered strip at the bottom represents the subtitle track's transparent background. It occurs because the action video's dimensions are 960x540, whereas textrender created a video track with different dimensions, perhaps something more like 800x600. I haven't checked what the actual dimensions are, but it doesn't matter; they just don't match. We can also see that the widths don't match, because textrender horizontally centers its text by default, whereas the text in the picture does not look centered. It is centered, but within a narrower width that we can't see directly in this picture without adding a border.

Surprisingly, textrender does not have defined properties that would allow me to specify a height and width. However, it's not really necessary, because there is already a method for coaxing textrender's output to the right dimensions that match the action video.

textrender ! video/x-raw,width=960,height=540 ! mixer.sink_1

The new element in the middle is a capsfilter, which I discussed in my previous post. During caps negotiation, it restricts textrender's source pad to output that matches those stream attributes, or "caps" (capabilities). textrender's doc page describes that pad like this:

- name: src
- direction: source
- presence: always
- details: video/x-raw, format=(string){ AYUV, ARGB }, width=(int)[ 1, 2147483647 ], height=(int)[ 1, 2147483647 ], framerate=(fraction)[ 0/1, 2147483647/1 ]

The "width=(int)[ 1, 2147483647 ]" and "height=(int)[ 1, 2147483647 ]" parts of the details mean that width and height can be anything we ask them to be, so textrender has no trouble producing output that matches this request and positions its text accordingly. The output will now look like:
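Put another way, the capsfilter's fixed width and height only need to fall inside the integer ranges in textrender's template. The minimal sketch below models just that bounds check and builds the inline capsfilter string; it is a simplification of real caps intersection (format and framerate are ignored), and the names `TEXTRENDER_SRC_RANGES`, `fits_template`, and `capsfilter_spec` are hypothetical.

```python
# Integer ranges copied from textrender's src pad template above.
TEXTRENDER_SRC_RANGES = {
    "width": (1, 2147483647),
    "height": (1, 2147483647),
}


def fits_template(ranges, **fields):
    """True if every requested integer field lies within its [min, max] range.

    Fields the template does not constrain are treated as acceptable.
    """
    for key, value in fields.items():
        low, high = ranges.get(key, (value, value))
        if not low <= value <= high:
            return False
    return True


def capsfilter_spec(width, height):
    """Build the inline capsfilter used between textrender and the mixer."""
    return f"video/x-raw,width={width},height={height}"


if __name__ == "__main__":
    if fits_template(TEXTRENDER_SRC_RANGES, width=960, height=540):
        print(f"textrender ! {capsfilter_spec(960, 540)} ! mixer.sink_1")
```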

Much better! We now have a pipeline that does something more useful than demuxing and re-assembling the same MPEG-4 stream without any modification.

Pausing and Probing the Pipeline

The above pipeline spec doesn't quite satisfy me. What if my source file contains an action video whose dimensions are not 960x540? I don't want to have to rewrite my pipeline spec (and the Python app that parses and runs it) each time. I want to probe the source video after the pipeline has been initialized and started, but before the first frame is mixed with the subtitle track, to find out its dimensions. Then I want my app to assign those dimensions to the capsfilter element, and only then allow the pipeline to continue.

First we need to add the typefind element to the pipeline, give the subtitle filesrc a name ("subsrc") so the app can find it, and replace the static capsfilter with a named one we can modify:

1. videomixer name=mixer sink_0::zorder=0 sink_1::zorder=1
2. filesrc location=my-video.mp4 ! typefind name=typefind ! decodebin name=demuxer ! mixer.sink_0
3. filesrc name=subsrc location=subtitles.srt ! subparse ! textrender ! capsfilter name=capsfilter ! mixer.sink_1
4. mpegtsmux name=muxer
5. demuxer. ! audioconvert ! audioresample ! faac ! muxer.
6. mixer. ! x264enc ! muxer. ! some element that accepts an MPEG-4 stream

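In the Python app, the setup and the two callbacks look like this:

    # Assumes: from gi.repository import Gst, Gst.init(None) already called,
    # and self.pipeline built with Gst.parse_launch() from the spec above.
    typefind = self.pipeline.get_by_name('typefind')
    typefind.connect('have-type', self._have_type)
    subsrc_pad = self.pipeline.get_by_name('subsrc').get_static_pad('src')
    # Keep the id that add_probe() returns so the probe can be removed later
    self._probe_id = subsrc_pad.add_probe(Gst.PadProbeType.BLOCK, self._pad_probe_callback)
    self.pipeline.set_state(Gst.State.PAUSED)

    def _pad_probe_callback(self, pad, info):
        # Nothing to inspect; OK leaves the blocking probe in place
        return Gst.PadProbeReturn.OK

    def _have_type(self, typefind, prob, caps):
        '''Query video dimensions, then set caps on capsfilter, then link and play'''
        self.logger.info('in Hls::_have_type(), prob={}, caps={}'.format(prob, caps.to_string()))
        # Note: typefind directly after filesrc may report container caps
        # (e.g. video/quicktime) that carry no width/height fields.
        st = caps.get_structure(0)
        # Build fresh raw-video caps carrying only the dimensions
        newcaps = Gst.Caps.new_empty_simple('video/x-raw')
        newcaps.set_value('height', st.get_value('height'))
        newcaps.set_value('width', st.get_value('width'))
        capsfilter = self.pipeline.get_by_name('capsfilter')
        capsfilter.set_property('caps', newcaps)
        # Unblock the subtitle reader now that the dimensions are known
        subsrc_pad = self.pipeline.get_by_name('subsrc').get_static_pad('src')
        subsrc_pad.remove_probe(self._probe_id)
        self.logger.info('Setting pipeline state to PLAYING')
        self.pipeline.set_state(Gst.State.PLAYING)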

The add_probe() call sets a blocking probe on the source pad of the filesrc element that reads the subtitle file. A blocking probe does two things: 1) it blocks data from flowing through that pad, and 2) it lets us inspect the pad's data stream with a callback. In this case I don't need to inspect the content, so _pad_probe_callback(self, ...) does no real work. But the probe will prevent any processing of the subtitle stream until my pipeline is ready.
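To make the blocking behavior concrete without a Gstreamer install, here is a toy pure-Python model of a pad with a blocking probe. `ToyPad` and its methods are hypothetical and are not the Gstreamer API: buffers pushed while the probe is installed are held, and removing the probe releases them in order. A real blocking probe instead stalls the streaming thread that is pushing data through the pad, so treat this as an analogy only.

```python
class ToyPad:
    """Toy model of a source pad with an optional blocking probe (not Gst API)."""

    def __init__(self):
        self.delivered = []  # buffers that reached downstream
        self._held = []      # buffers waiting behind the probe
        self.blocked = False

    def add_blocking_probe(self):
        self.blocked = True

    def push(self, buf):
        # While blocked, data is held instead of flowing downstream.
        if self.blocked:
            self._held.append(buf)
        else:
            self.delivered.append(buf)

    def remove_probe(self):
        # Unblocking releases held data in its original order.
        self.blocked = False
        self.delivered.extend(self._held)
        self._held.clear()


if __name__ == "__main__":
    pad = ToyPad()
    pad.add_blocking_probe()
    pad.push("cue 1")
    print(pad.delivered)  # nothing yet: the probe is blocking
    pad.remove_probe()
    print(pad.delivered)
```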

The set_state(Gst.State.PAUSED) call changes the pipeline state from NULL (uninitialized, no resources) to PAUSED. In the PAUSED state, the pipeline is fully provisioned and ready to go. In addition, every element along the pipeline, other than those already blocked by a blocking probe or by their own processing logic, will be "pre-rolled", meaning it is now holding onto its first buffer of content.

The filesrc that reads the subtitle file has already been blocked. There is no need to do anything similar for the action video track, because the videomixer won't output anything until it has seen one buffer on each of its sink pads with in-sync timestamps, meaning that it has something to mix. When the typefind element emits its "have-type" signal and Gstreamer calls the handler _have_type(self, ...), parts of the pipeline will be pre-rolled but frozen, and we will have the content properties in the "caps" argument.

The body of _have_type() extracts the dimensions from the caps, creates a new set of caps, and assigns it to the capsfilter whose sink pad is connected to textrender's src pad, thus coercing textrender's output dimensions.

I don't know why I had to create a new Gst.Caps instance instead of just assigning the "caps" argument to the capsfilter, but when I tried that, the pipeline just stopped without any error message. When I created a new caps instance that contained only the fields I wanted to change, the height and width, it worked. I also had to specify the media type when I created the new caps container, but that will always be 'video/x-raw'.
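The "copy only the fields you need" step can also be sketched on caps in their string form, such as the text that caps.to_string() logs in _have_type(). The helper `dimension_caps` below is hypothetical and deliberately naive: it only picks up fixed integer width and height fields and ignores everything else. With PyGObject, its result could be turned into real caps with Gst.Caps.from_string() before being assigned to the capsfilter; that step is not shown.

```python
import re

# Matches fixed integer fields such as "width=(int)960" in a caps string.
_INT_FIELD = re.compile(r"\b(width|height)=\(int\)(\d+)")


def dimension_caps(caps_string, media_type="video/x-raw"):
    """Return a minimal caps string holding only width and height.

    Fields missing from caps_string are omitted; ranges such as
    width=(int)[ 1, 100 ] do not match the pattern and are omitted too.
    """
    dims = {key: int(value) for key, value in _INT_FIELD.findall(caps_string)}
    parts = [media_type]
    for key in ("width", "height"):
        if key in dims:
            parts.append(f"{key}={dims[key]}")
    return ",".join(parts)


if __name__ == "__main__":
    sample = ("video/x-raw, format=(string)I420, width=(int)960, "
              "height=(int)540, framerate=(fraction)25/1")
    print(dimension_caps(sample))
```

If the caps describe a container rather than raw video (for example, the video/quicktime caps that a typefind placed right after filesrc typically reports for an MP4 file), no dimensions are found and only the media type is returned, which is worth checking before applying the result.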

The remove_probe() call cleans up by removing the probe on the subtitle file reader, allowing it to do its work.

Finally, set_state(Gst.State.PLAYING) sets the pipeline state to PLAYING. The pipeline will transition to that state (there may be some lag until every element has pre-rolled in the PAUSED state, holding a content buffer) and then play.
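Behind a request like PLAYING, Gstreamer walks each element through the intermediate states one step at a time, in the order NULL, READY, PAUSED, PLAYING (and in reverse when shutting down). The toy helper `state_path` below is hypothetical, not part of the Gstreamer API; it simply lists the steps such a change passes through, which is the work set_state() performs for real.

```python
# Gstreamer element states in ascending order.
STATES = ["NULL", "READY", "PAUSED", "PLAYING"]


def state_path(current, target):
    """List the states passed through when moving from current to target.

    Changes happen one step at a time, so NULL -> PLAYING visits READY
    and PAUSED on the way; downward changes walk the list in reverse.
    """
    i, j = STATES.index(current), STATES.index(target)
    step = 1 if j >= i else -1
    return [STATES[k] for k in range(i + step, j + step, step)]


if __name__ == "__main__":
    print(state_path("NULL", "PAUSED"))     # the app's first set_state()
    print(state_path("PAUSED", "PLAYING"))  # the call made in _have_type()
```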

Conclusion

I haven't even gotten to my precious fade-effect for the subtitles yet. That's coming in a subsequent post. If you've made it this far and are still with me, hang on!